Data Hub Integration Guide

This product guide describes the integration of Triple’A Plus (TAP) with Temenos Data Hub (TDH) via Data Event Streaming (DES). The integration lets banks extract data from TAP through subscriptions and make it available in TDH in near real time, e.g., for enhanced reporting. DES is a highly scalable, elastic, distributed, fault-tolerant application that streams entity events from TAP-Outbox / TMN_DES_EVENT and integrates with TDH. For more information about DES, refer to the Data Event Streaming documentation.

Data extraction for streaming is supported for:

- TAP core entities

- TAP user-defined entities

- Extension fields (data pre-computation) from core and user-defined entities

- Search formats

Outbox subscribed events (subscriptions with nature outbox) capture the changes in the respective entities and store the entity data in the corresponding outbox tables, from where it is published to TDH in near real time via DES.

The schema is published to the outbox schema table, AAAMAINDB.OUTBOX_SCHEMA, and the values are published to the outbox table, AAAMAINDB.OUTBOX.

The OUTBOX_SCHEMA describes the TAP entity with its fields and the related data types, as defined in TAP core. TMN_DES_EVENT and TMN_DES_AVRO_SCHEMA_EVENT are the DES tables created as part of the DES installation process.

Example:

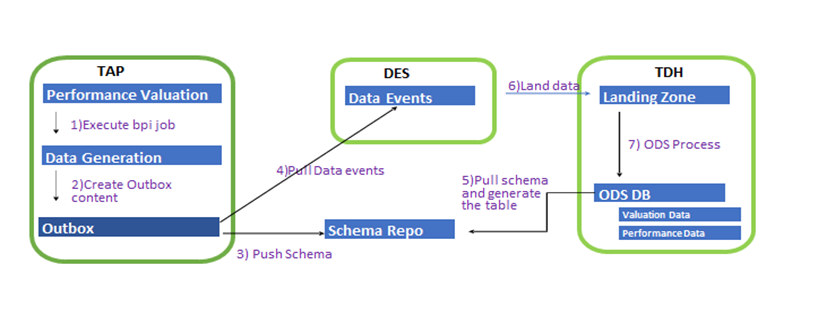

The diagram below describes how performance data calculated in TAP is extracted (Search Data Format) and sent to TDH.

More details on performance data extraction from TAP to TDH are provided in section 4.

Process Overview

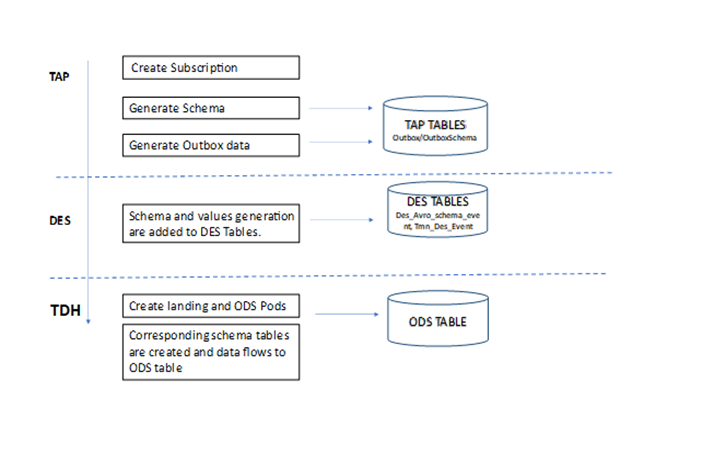

Data extraction from TAP for streaming is based on subscriptions. Subscriptions can be created with or without formats. The diagram in this section shows the steps involved in the Data Hub integration via DES.

For data extraction, either an entity's full content or a specific set of its attributes can be defined, depending on the bank's needs. Extended attributes and user-defined fields of an entity can be included.

If pre-computed data is also in the scope of data extraction, the corresponding TSL pre-computation jobs must be defined and executed so that all data is available before the schema is generated in the outbox schema table.

Configuration

The TAP system parameter DES_ENABLED must be set to 1 to enable the data flow into the outbox table. The default value of this parameter is 0. DES must be installed and running for the data to be processed. For detailed DES configuration, refer to the Configuring DES guide.

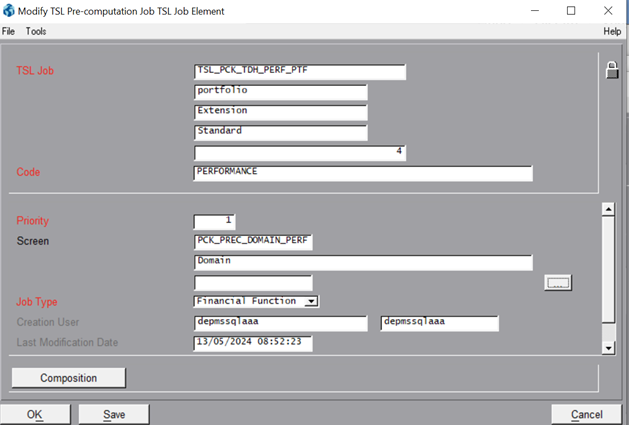

Create a TSL pre-computation job of nature extension (please refer to the FrontOfficeWeb_TSL_OperatingGuide.pdf for detailed information).

In the Precomp job Element composition, add the relevant search data formats.

When search data formats and extended attributes are in the data extraction scope, only attributes whose outbox_publish_e field mapping is enabled are extracted. To extract all format elements, the outbox_publish_e flag of the dict_attribute must therefore be set to 1. Then execute install_ddl to propagate these changes to the meta dictionary.

- Schema creation via otf-shell: The schema describes the data and formats for extraction. To create it, start otf-shell in the desired business entity and run the update-outbox-schema command to generate the schema in the TAP outbox schema table.

- DES – TDH Mapping: In the TDH application, create the Landing and Operational Data Store (ODS) application pods as follows.

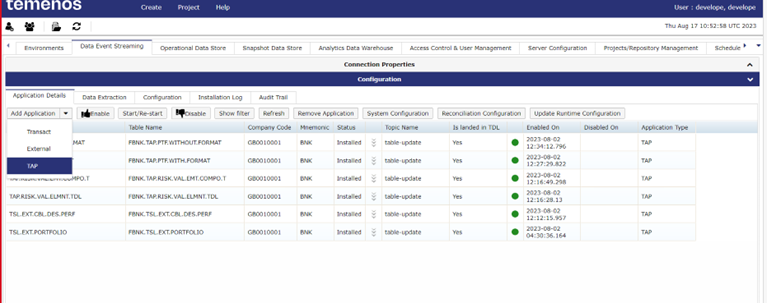

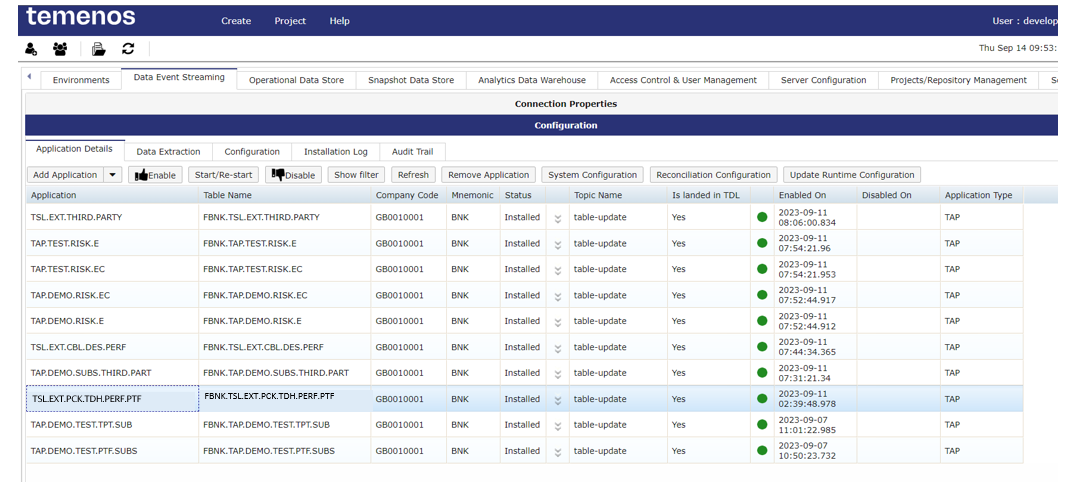

- Navigate to the Data Event Streaming or Configuration tab and then use Add Application to add TAP, as shown in the screen below:

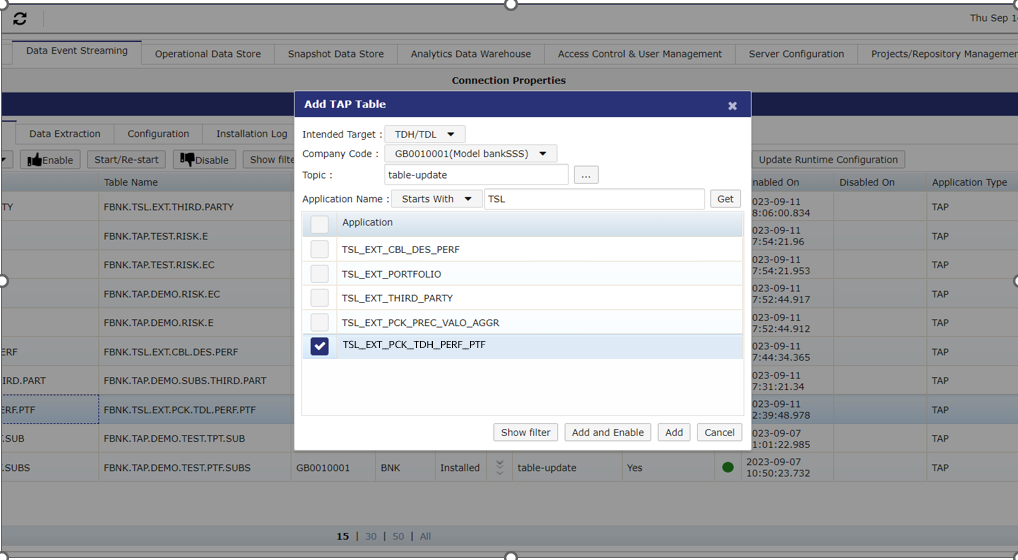

A pop-up window appears. Enter the Company Code, Topic, and Application Name, and then click the “Add and Enable” button to add a record in the application, as shown below.

Navigate to the Operational Data Store card and create the ODS application by clicking the “Add” button. Select the corresponding Landing application and set the application status to Enabled.

A dedicated BPI job associated with the enabled state will generate the values in the outbox table. If DES is up and running, the data flows to the corresponding DES tables:

- TMN_DES_AVRO_SCHEMA_EVENT

- TMN_DES_EVENT

In the ODS (Operational Data Store) database, a corresponding table is created and the outbox data flows to the ODS table.
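One simple way to confirm that data is arriving is to count the rows in the outbox tables named in this guide. The sketch below is only an illustration under several assumptions: `count_rows` and `KNOWN_TABLES` are hypothetical names, the guide does not specify the database driver, and an in-memory SQLite database with made-up placeholder columns stands in for the real TAP database so that the example can run on its own.

```python
import sqlite3

# Table names as given in this guide; anything else is rejected.
KNOWN_TABLES = (
    "AAAMAINDB.OUTBOX_SCHEMA",
    "AAAMAINDB.OUTBOX",
)


def count_rows(conn, table):
    """Return the row count of one known outbox table (hypothetical helper)."""
    if table not in KNOWN_TABLES:
        raise ValueError(f"unexpected table: {table}")
    cur = conn.cursor()
    # Safe to interpolate: the name was checked against KNOWN_TABLES above.
    cur.execute(f"SELECT COUNT(*) FROM {table}")
    return cur.fetchone()[0]


# Stand-in database for demonstration only (assumption): an in-memory SQLite
# database attached under the name AAAMAINDB, with placeholder columns that
# do not reflect the real table layout.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS AAAMAINDB")
conn.execute("CREATE TABLE AAAMAINDB.OUTBOX_SCHEMA (placeholder TEXT)")
conn.execute("CREATE TABLE AAAMAINDB.OUTBOX (placeholder TEXT)")
conn.execute("INSERT INTO AAAMAINDB.OUTBOX (placeholder) VALUES ('x')")

for name in KNOWN_TABLES:
    print(name, count_rows(conn, name))
```

In a real environment, the stand-in connection would be replaced by a connection to the TAP database, and the same kind of count could be applied to the DES tables once their schema qualification is known.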


As for other attributes, extended attributes in the scope of data extraction must be flagged accordingly. To do so, follow the steps below:

- Update the extended attributes to be included in the schema (enable the outbox_publish_mapping for these attributes).

- Run the update-outbox-schema command in otf-shell to generate the schema in the outbox_schema table.

- Create the Landing and ODS application pods in TDH as explained in the previous section.

- The corresponding BPI job generates values in the outbox table and the ODS DB.

Complete the following steps:

- Create a subscription for an entity (e.g., portfolio, third party etc.) with or without format(s) in the GUI.


To create a subscription:

- Navigate to Administration > Configuration > Subscription.

- Select subscription nature as outbox.

- Run the update-outbox-schema command in otf-shell to generate the schema in the outbox_schema table.

- Create the Landing and ODS application in TDH.

- Any insert or modification of an entity with a linked subscription creates an outbox entry, and the related data flows to the ODS DB in TDH.

Packaged Integration: Performance Data Extraction

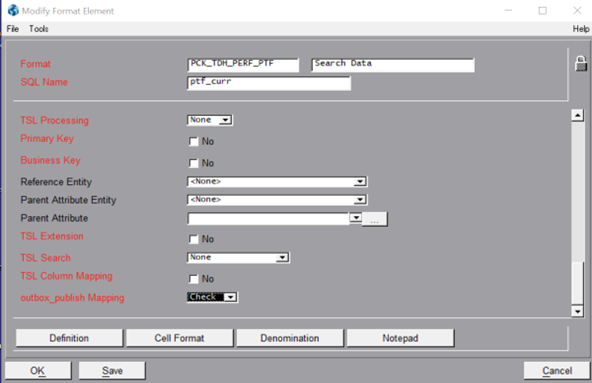

This section describes the packaged performance data extraction in detail, as a reference example for this new integrated feature. The applied format is PCK_TDH_PERF_PTF (Search Data Format).

The following steps describe how performance data calculated in TAP is sent to TDH:

- Select the search data format - PCK_TDH_PERF_PTF (included in the standard package).


The standard PCK_TDH_PERF_PTF format includes the necessary fields enabled for outbox publishing. Data extraction can be enabled or disabled by editing the relevant format elements. Set the outbox_publish Mapping to Check for the data to be extracted, as demonstrated in the screenshot below:

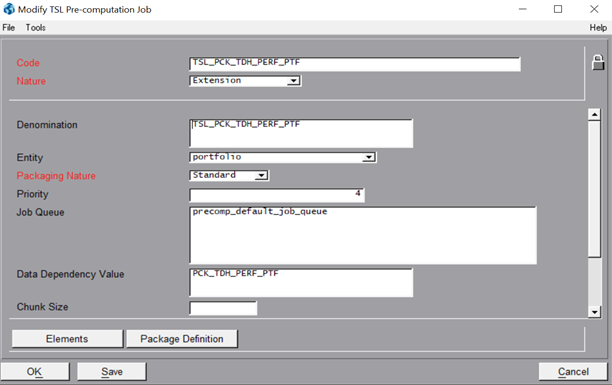

- Create the relevant TSL pre-computation job with nature “Extension” and specify the data dependency value to link it to the related BPI job. For further details, refer to the FrontOfficeWeb_TSL_OperatingGuide.pdf.

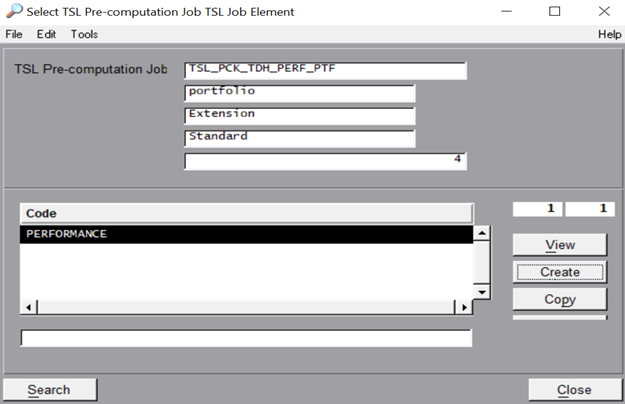

- Click on Elements to create or add the relevant elements.

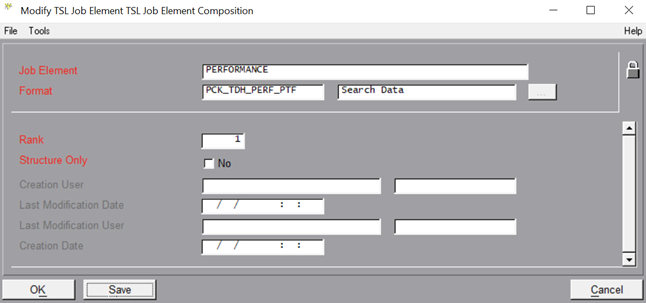

- Edit Composition to add the related performance format(s) applied to extract the data.

- As described in the Configuration section, execute the install_ddl utility, run update-outbox-schema, and create the Landing and ODS applications in TDH.

- Execute the BPI job as shown below:

submit-bpi-job -D entity=portfolio -D entityDimE=1 -D dataDependencyParamValue=CBL -D entityDimCode=T_AI_PTF11 -t 7200 -w tsl-precomp-entity-job.xml

entityDimE = 1 - Single Portfolio

entityDimE = 2 - Portfolio List

entityDimCode - PortfolioCode/PortfolioListCode

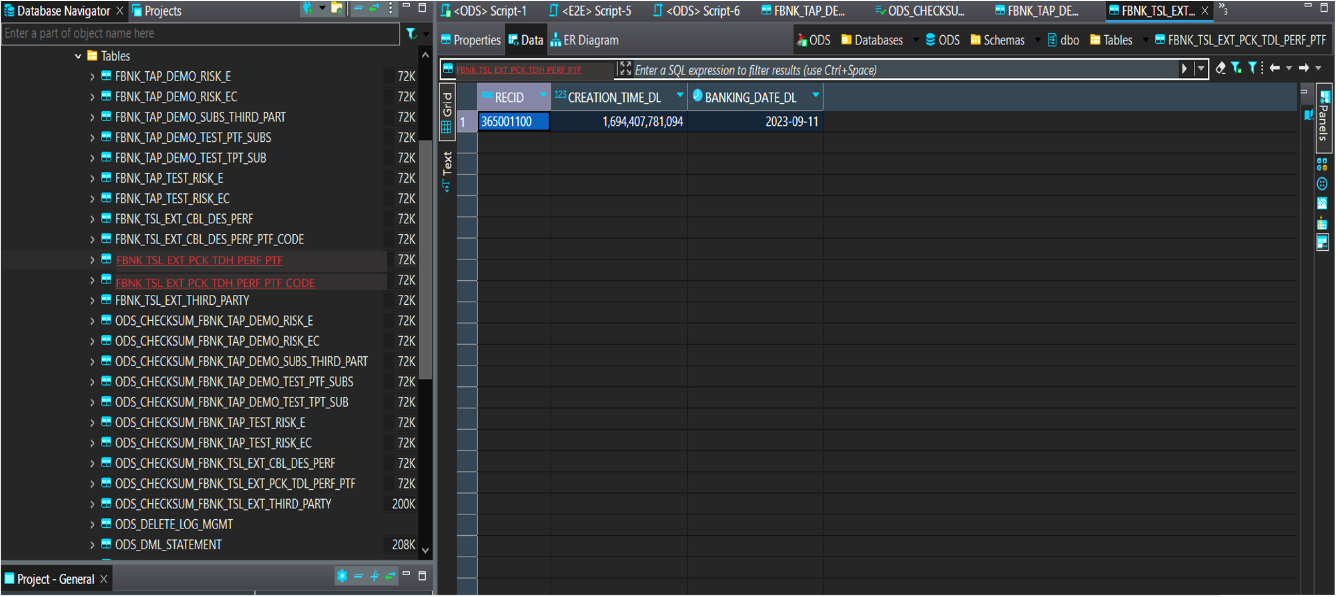

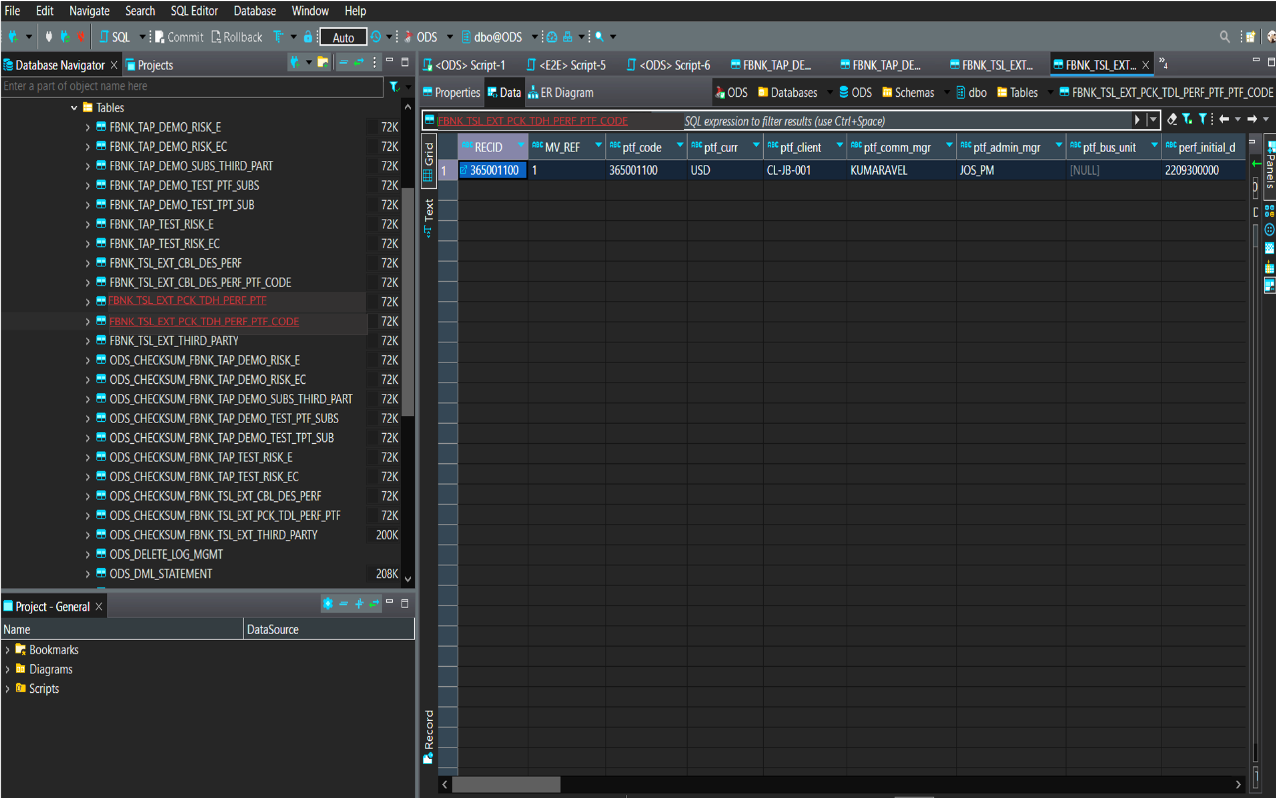

- Performance data is calculated, extracted, and created in the TDH ODS/dbo schema.
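The submit-bpi-job invocation from the steps above can also be assembled programmatically, which makes it easier to check the entityDimE value before the job is submitted. The following is a minimal sketch, not part of the product: `build_bpi_command` and its parameter names are hypothetical, the defaults are copied from the example command in this guide, and the function only builds the command string without executing it.

```python
import shlex

# Values for entityDimE as listed in this guide.
ENTITY_DIM_SINGLE_PORTFOLIO = 1
ENTITY_DIM_PORTFOLIO_LIST = 2


def build_bpi_command(entity_dim_e, entity_dim_code, data_dependency="CBL",
                      entity="portfolio", t_value=7200,
                      job_file="tsl-precomp-entity-job.xml"):
    """Return the submit-bpi-job command line as one string (hypothetical helper)."""
    if entity_dim_e not in (ENTITY_DIM_SINGLE_PORTFOLIO, ENTITY_DIM_PORTFOLIO_LIST):
        raise ValueError("entityDimE must be 1 (single portfolio) or 2 (portfolio list)")
    if not entity_dim_code:
        raise ValueError("entityDimCode must be a portfolio or portfolio list code")
    args = [
        "submit-bpi-job",
        "-D", f"entity={entity}",
        "-D", f"entityDimE={entity_dim_e}",
        "-D", f"dataDependencyParamValue={data_dependency}",
        "-D", f"entityDimCode={entity_dim_code}",
        # -t and -w values are taken unchanged from the guide's example.
        "-t", str(t_value),
        "-w", job_file,
    ]
    return shlex.join(args)


print(build_bpi_command(ENTITY_DIM_SINGLE_PORTFOLIO, "T_AI_PTF11"))
```

For a portfolio list, the same helper would be called with `ENTITY_DIM_PORTFOLIO_LIST` and the portfolio list code.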
